Microsoft looks to improve Bing Maps by bringing new technology to developers

Microsoft released a preview of its new Bing Maps V8 control at Build 2016, taking another step toward improving this web mapping platform for developers. Two phrases best describe this version: more features and faster performance.

Let’s start with the new features. The company’s aim is to make business intelligence and data visualization richer and more interactive, so that users can find locations more easily and get a clearer picture of them.

The list of new features includes:

-

Autosuggest – Provides suggestions dynamically as you type a location in a search box

-

Clustering – Visualize large sets of pushpins, by having overlapping pushpins group and ungroup automatically as users change zoom level.

-

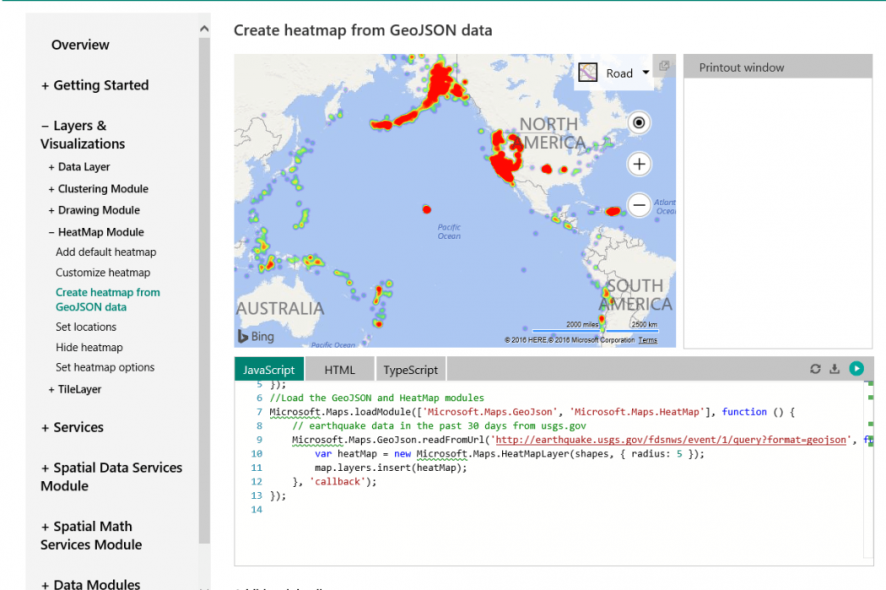

GeoJSON Support – Easily import and export GeoJSON data, one of the most common file formats used for sharing and storing spatial data.

-

Heatmaps – Visualize the density of data points as a heatmap.

-

Streetside imagery – Explore 360-degrees of street-level imagery.

-

Touch support – Easily navigate Bing Maps using a touch-screen device as well as mouse or keyboard.

-

Spatial Math module – Provides a large set of spatial math operations from calculating distances and areas, to performing boolean operations on shapes.

-

Administrative boundary data – easily access Bing Maps boundary data.
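To give a feel for how the clustering feature in the list above behaves as the zoom level changes, here is a minimal sketch of grid-based clustering. It is not Bing Maps' actual algorithm or API: the function names `to_pixel` and `cluster_pushpins`, the `grid_size` parameter and the approximate Seattle-area coordinates are all assumptions made for illustration. The only borrowed piece is the standard Web Mercator projection that web map tile schemes commonly use.

```python
import math
from collections import defaultdict

TILE_SIZE = 256  # pixels per tile edge in the common Web Mercator tile scheme


def to_pixel(lat, lon, zoom):
    """Project latitude/longitude to global pixel coordinates at a zoom level."""
    map_size = TILE_SIZE * 2 ** zoom
    # Clamp latitude to the range the Mercator projection can represent.
    lat = max(min(lat, 85.05112878), -85.05112878)
    sin_lat = math.sin(math.radians(lat))
    x = (lon + 180.0) / 360.0 * map_size
    y = (0.5 - math.log((1 + sin_lat) / (1 - sin_lat)) / (4 * math.pi)) * map_size
    return x, y


def cluster_pushpins(pushpins, zoom, grid_size=45):
    """Group pushpins whose pixel positions fall into the same grid cell.

    grid_size is a hypothetical cell width in pixels, not a documented default.
    """
    cells = defaultdict(list)
    for name, lat, lon in pushpins:
        x, y = to_pixel(lat, lon, zoom)
        cells[(int(x // grid_size), int(y // grid_size))].append(name)
    return list(cells.values())


# Illustrative pushpins with approximate coordinates (assumed, not from the article).
pushpins = [
    ("Space Needle", 47.6205, -122.3493),
    ("Pike Place Market", 47.6097, -122.3422),
    ("Microsoft Redmond campus", 47.6423, -122.1391),
]

for zoom in (5, 16):
    print(zoom, cluster_pushpins(pushpins, zoom))
```

Zoomed out to level 5, the three pushpins land in the same 45-pixel cell and collapse into one cluster. At level 16 they are hundreds of pixels apart and each becomes its own cluster. A fixed grid is the simplest approach, but pushpins that sit close together on opposite sides of a cell border stay separate, which a production control would likely smooth over.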

Performance has also improved: the control now uses the HTML5 canvas, which renders faster than previous map controls. This allows users to view more data and gain deeper insights.

A long-standing annoyance with Bing Maps has been its search results for addresses outside the USA. Users have long complained that maps outside the USA are of poor quality and that search results are inaccurate. It will be interesting to see whether Bing Maps V8 can address this issue. With Microsoft having recently updated the Windows 10 Maps app with better search results for Insiders, the company seems well on its way to doing so. If this was possible for Windows 10 Maps, why shouldn’t it be the case for Bing Maps?

For more information about the Bing Maps V8 control preview, check out Microsoft’s page.
